Speed is one of the main concerns of machine learning algorithms developers. The native SVM

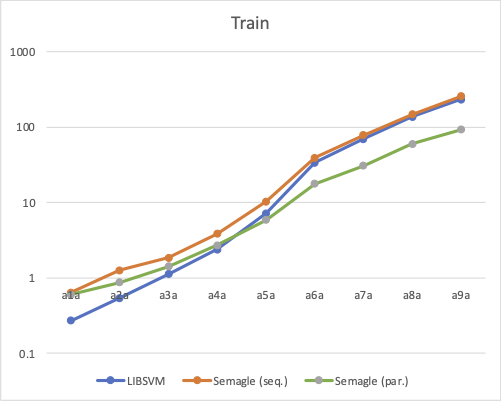

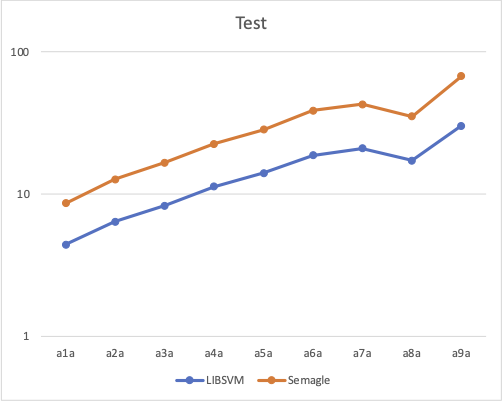

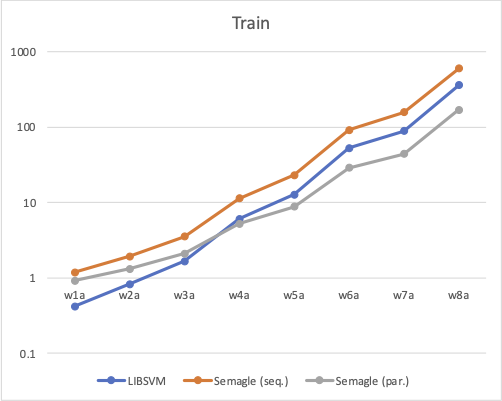

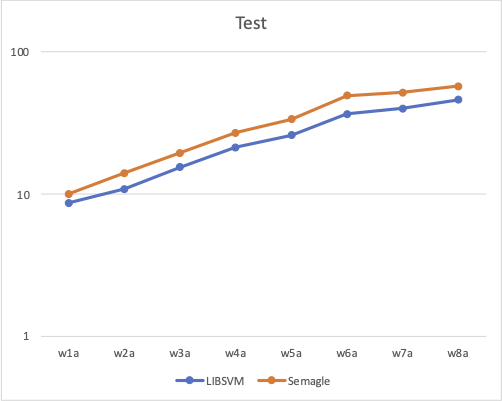

implementation (LIBSVM 3.25) is optimized for specific kernel types and demonstrates good training and test times for small and large problems. The F# implementation running on Net 5.0 runtime is 1.5 times slower for small problems but is only 10% slower for large problems. However, with parallel kernel evaluations, the F# implementation becomes faster after 5,000 training samples.
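The crossover point mentioned above can be checked against the benchmark tables in this post. The following is a minimal sketch, not part of either library: the tuples copy the training-set sizes and the "Train" (LIBSVM) and "Train (par.)" (Semagle) columns from the tables, and `first_crossover` is a hypothetical helper name introduced only for illustration.

```python
# (dataset, training samples, LIBSVM train time, Semagle parallel train time)
# Values are copied from the benchmark tables in this post.
ADULT = [
    ("a1a", 1605, 0.27, 0.60),
    ("a2a", 2265, 0.54, 0.87),
    ("a3a", 3185, 1.12, 1.42),
    ("a4a", 4781, 2.39, 2.71),
    ("a5a", 6414, 7.23, 5.88),
    ("a6a", 11220, 33.76, 17.72),
    ("a7a", 16100, 69.33, 30.79),
    ("a8a", 22696, 137.19, 60.10),
    ("a9a", 32561, 233.00, 93.01),
]
WEB = [
    ("w1a", 2477, 0.42, 0.92),
    ("w2a", 3470, 0.83, 1.32),
    ("w3a", 4912, 1.66, 2.10),
    ("w4a", 7366, 6.06, 5.25),
    ("w5a", 9888, 12.70, 8.83),
    ("w6a", 17188, 52.72, 28.85),
    ("w7a", 24692, 88.66, 44.35),
    ("w8a", 49749, 359.47, 169.33),
]


def first_crossover(rows):
    """Return (name, samples) of the smallest dataset where the
    parallel Semagle run trains faster than LIBSVM, or None."""
    for name, samples, libsvm, parallel in sorted(rows, key=lambda r: r[1]):
        if parallel < libsvm:
            return name, samples
    return None


print(first_crossover(ADULT))  # ('a5a', 6414)
print(first_crossover(WEB))    # ('w4a', 7366)
```

In both series the first dataset where the parallel run wins has more than 5,000 training samples, which agrees with the threshold quoted in the text.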

- Computer: MacBook Pro (Model A1990)

- Processor: 2.6 GHz 6-Core Intel Core i7

- Memory: 16 GB 2400 MHz DDR4

- Operating System: macOS Monterey (12.1)

- .NET SDK: 5.0.404

- Features: 123

- Kernel: RBF \(\gamma=1\)

- Cost: C=1

| Dataset (train/test) | LIBSVM 3.25 #SV | LIBSVM 3.25 Train (s) | LIBSVM 3.25 Test (s) | LIBSVM 3.25 Accuracy | Semagle #SV | Semagle Train, seq. (s) | Semagle Train, par. (s) | Semagle Test (s) | Semagle Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| a1a (1,605/30,956) | 1584 | 0.27 | 4.41 | 76.29% | 1584 | 0.64 | 0.60 | 4.23 | 76.29% |
| a2a (2,265/30,296) | 2233 | 0.54 | 6.39 | 76.52% | 2228 | 1.26 | 0.87 | 6.33 | 76.53% |
| a3a (3,185/29,376) | 3103 | 1.12 | 8.28 | 76.73% | 3099 | 1.85 | 1.42 | 8.40 | 76.73% |
| a4a (4,781/27,780) | 4595 | 2.39 | 11.26 | 77.73% | 4587 | 3.83 | 2.71 | 11.32 | 77.73% |
| a5a (6,414/26,147) | 6072 | 7.23 | 14.03 | 77.89% | 6067 | 10.29 | 5.88 | 14.32 | 77.89% |
| a6a (11,220/21,341) | 10235 | 33.76 | 18.68 | 78.53% | 10233 | 39.363 | 17.72 | 20.05 | 78.53% |
| a7a (16,100/16,461) | 14275 | 69.33 | 21.00 | 79.66% | 14266 | 78.55 | 30.79 | 21.85 | 79.66% |
| a8a (22,696/9,865) | 19487 | 137.19 | 17.23 | 80.31% | 19488 | 147.98 | 60.10 | 17.90 | 80.31% |
| a9a (32,561/16,281) | 26947 | 233.00 | 30.24 | 80.77% | 26947 | 255.97 | 93.01 | 37.11 | 80.769% |
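For reference, the RBF kernel used in these benchmarks is \(K(x, y) = \exp(-\gamma \lVert x - y \rVert^2)\) with \(\gamma = 1\). The sketch below shows what "parallel kernel evaluations" can look like in principle: one row of kernel values is computed first sequentially and then with the work spread over a thread pool. It is an illustration under stated assumptions, not Semagle's or LIBSVM's actual code. The function names (`rbf`, `kernel_row_seq`, `kernel_row_par`) and the dense list representation are hypothetical, and CPython threads will not actually speed up pure-Python arithmetic.

```python
import math
from concurrent.futures import ThreadPoolExecutor

GAMMA = 1.0  # matches the benchmark setting gamma = 1


def rbf(x, y, gamma=GAMMA):
    """RBF kernel K(x, y) = exp(-gamma * ||x - y||^2) for dense vectors."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def kernel_row_seq(x, samples):
    """Evaluate K(x, s) for every sample, one after another."""
    return [rbf(x, s) for s in samples]


def kernel_row_par(x, samples, workers=4):
    """Evaluate the same row with the calls spread over a thread pool.

    Illustrative structure only: in CPython the GIL prevents a real
    speed-up for pure-Python math like this.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the input samples
        return list(pool.map(lambda s: rbf(x, s), samples))


samples = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
row = kernel_row_seq([0.0, 0.0], samples)
# Both strategies must produce identical kernel values.
assert row == kernel_row_par([0.0, 0.0], samples)
print(row)
```

Each kernel value depends only on its own pair of vectors, so the evaluations are independent and can be distributed across cores without synchronization. This independence is what makes the kernel row a natural unit for parallel work.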

- Features: 300

- Kernel: RBF \(\gamma=1\)

- Cost: C=1

| Dataset (train/test) | LIBSVM 3.25 #SV | LIBSVM 3.25 Train (s) | LIBSVM 3.25 Test (s) | LIBSVM 3.25 Accuracy | Semagle #SV | Semagle Train, seq. (s) | Semagle Train, par. (s) | Semagle Test (s) | Semagle Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| w1a (2,477/47,272) | 2080 | 0.42 | 8.66 | 97.30% | 2080 | 1.19 | 0.92 | 10.02 | 97.30% |
| w2a (3,470/46,279) | 2892 | 0.83 | 10.87 | 97.35% | 2892 | 1.93 | 1.32 | 14.03 | 97.35% |
| w3a (4,912/44,837) | 4018 | 1.66 | 15.46 | 97.37% | 4018 | 3.52 | 2.10 | 19.49 | 97.37% |
| w4a (7,366/42,383) | 5886 | 6.06 | 21.26 | 97.43% | 5886 | 11.3 | 5.25 | 26.96 | 97.43% |
| w5a (9,888/39,861) | 7715 | 12.70 | 25.94 | 97.45% | 7715 | 23.21 | 8.83 | 33.63 | 97.45% |
| w6a (17,188/32,561) | 12864 | 52.72 | 36.51 | 97.60% | 12864 | 92.17 | 28.85 | 49.22 | 97.60% |
| w7a (24,692/25,057) | 17786 | 88.66 | 39.91 | 97.61% | 17786 | 158.37 | 44.35 | 51.75 | 97.61% |
| w8a (49,749/14,951) | 32705 | 359.47 | 46.01 | 99.39% | 32705 | 596.78 | 169.33 | 57.29 | 99.39% |